[see blog entry 7/7/11 regarding intentional font issues in this post]

I sat through several Technical Review Boards (TRB) recently. After approximately 3 hours of review, each degraded into a session of writing the documents rather than reviewing them. None were of a quality suitable for publication. Several documents were unavailable beyond a prominent “draft” notice. What’s going on in our profession of Test & Evaluation engineering?

Two concomitant events give me a clue. First, I read a report published in the June 2004 issue of Control Engineering stating, in a survey on computing practices of new engineers, that MathCAD and Matlab are used “much more heavily in academia than in industry.” The editor pondered why.

Second, in the latest TRB, a 4:30 pm discussion devolved into an analysis of why the author should list data products beyond an average and standard deviation. The author’s contention was that the minimum is promised, but for the actual test report, he would generate appropriate additional graphs or charts based on the content of the data.

Considering these issues in close proximity to each other made me realize it’s an issue of professional curiosity vs. business sense. A student uses Matlab or MathCAD because either program is an excellent experimentation environment – they are mathematical tools for searching and documenting invasive understanding after significant trial and error. In contrast, business communication is almost entirely defensive communication to others advocating or justifying a claim. Technical profoundness never sells a proposal or justifies a budget. So people who once spent hours poring over Matlab now realize success with a half dozen bullet lists in a PowerPoint brief.

In college, it was an exercise in studying the tool and the world. In the business/government/industrial world, it’s an exercise in using the tool efficiently and getting onto the next concern.

In the case of the recent test plan that was on my mind, the author wanted to preserve the freedom to experiment with data and determine the best data products in context. This free intellectual pursuit is desired by two groups of people, at opposite extremes of the experience:

1) Increasingly, acquisition programs host a number of Ph.D.-type personalities who desire to minimally commit to test procedures because they want to experiment with integrated hardware until it’s optimized. They desire experimental data, not test data. Conflicts result when the government wants constrained tests, and a TSPR (Total System Performance Responsibility) contractor wants freedom.

2) The matrixed engineer who doesn’t understand subtle end-game negotiation of a contract sees the data product section of a test plan as a constraining straitjacket. The only escape is to document as little as possible, and learn/respond as the data rolls in.

If it’s an incomplete document, a review board should give it 3 hours, and go home. Instead, we destroy until the author is beaten. If it was an experienced person, they go to a different program. If it was a new person, we’ve killed one of our own and demotivated them from a career.

If the only person who succeeds in this environment is the un-curious, middle-of-the-road performer, we have a situation that is broken. What can be different?

- Desire Peer Review

- Be nice

- Hire the right kind of person

- Respect Others’ Time

- Version control

- Turn times on reviews (duration and deadline)

- Do not look to justify your job at another’s meeting

- Reviews must have a scope and granularity appropriate for the presented document. A draft does not get a detailed release.

- Replace Event-driven TRB & SRB processes.

Perhaps Web-based logging or Wiki technology can help:

- Public visibility (allowed peers)

- Documented cross-referenced comments (not just email round table with death by attachments) – forces intellectually original contribution.

- Standard database archive and search environment (MySQL or SQL Server).

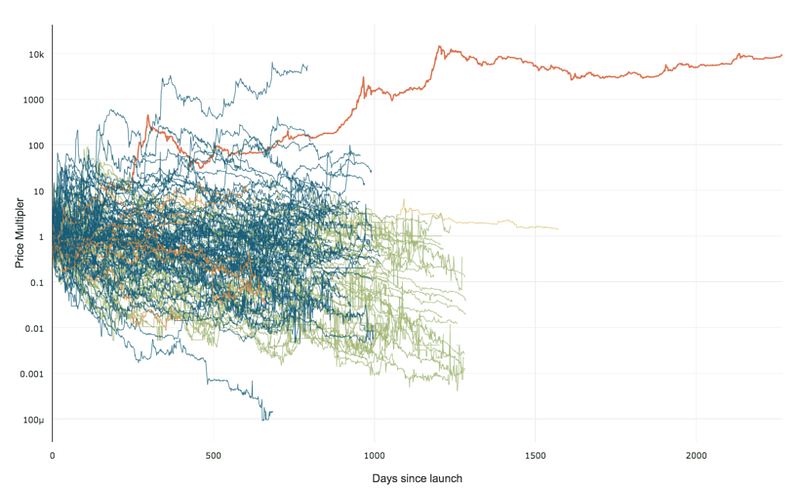

99% of ICOs Will Fail

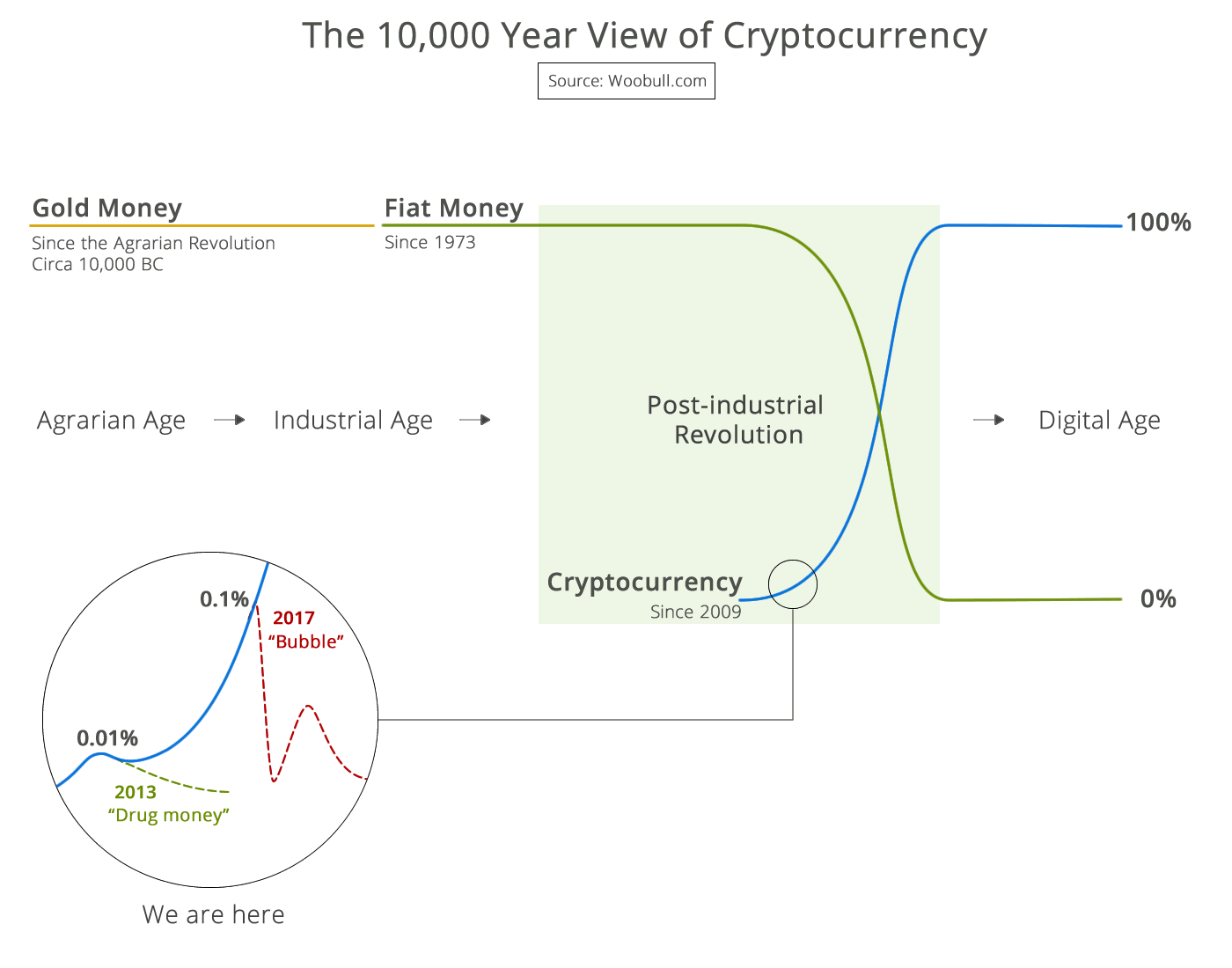

99% of ICOs Will Fail The 10,000 year view of cryptocurrency

The 10,000 year view of cryptocurrency